by Elizabeth Hines | Jul 16, 2015 | Blog, Strategy

Remember the days when a rear-view mirror was all we needed to make business decisions? Now, predictive analytics appears poised to turn hindsight into a relic of the past.

Two Gartner analysts echo that sentiment, stating, “Few technology areas will have greater potential to improve the financial performance and position of a commercial global enterprise than predictive analytics.”

Executives are eager to jump on the bandwagon too. Although only 13% of 250 executives surveyed by Accenture said they use big data primarily for predictive purposes, as many as 88% indicated big data analytics is a top priority for their company. With an increasing number of companies learning to master the precursors to developing predictive models — namely, connecting, monitoring, and analyzing — we can safely assume the art of gleaning business intelligence from foresight will continue to grow.

Amid the promises of predictive analytics, however, we also find a number of pitfalls. Some experts caution there are situations when predictive analytics techniques can prove inadequate, if not useless.

Let’s consider three examples:

- Predictive analytics works well in a stable environment in which the future of the business is likely to resemble its past and present. But Harvard Business School professor Clayton Christensen points out that in the event of a major disruption, the past does a poor job of foreshadowing future events. As an example, he cites the advent of PCs and commodity servers, arguing that computer vendors who specialized in minicomputers in the 1980s couldn’t possibly have predicted the sales impact of these products: they were innovations, so there was no historical data to analyze.

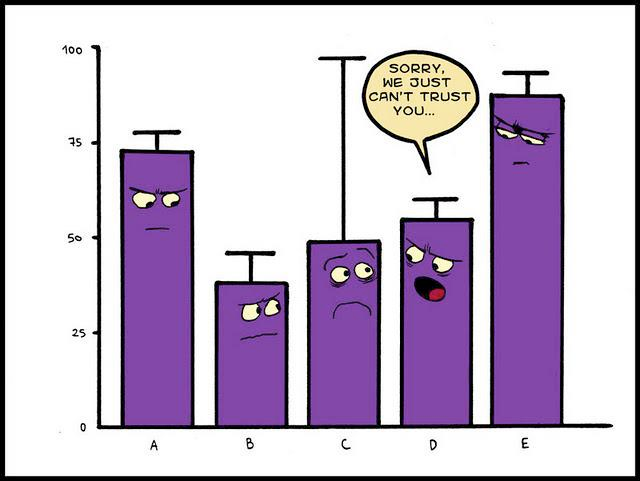

- Bias in favor of a positive result is another danger when interpreting data, and one of the most common errors in predictive analytics projects. Speaking at the 2014 Predictive Analytics World conference in Boston, John Elder, president of consulting firm Elder Research, Inc., made a good point when he noted that people “often look for data to justify our decisions,” when it should be the other way around.

- Finally, mining big data will do little good if the insights are not directly tied to an operational process. I suspect more companies than we realize are wasting precious time and manpower on big data projects that are not adequately understood, producing trivia rather than actionable business intelligence.

With the above challenges in mind, talent acquisition and thorough A/B tests will be key components of any predictive analytics project. What else do you think organizations need to do to use foresight effectively?

Fronetics Strategic Advisors is a leading management consulting firm. Our firm works with companies to identify and execute strategies for growth and value creation.

Whether it is a wholesale food distributor seeking guidance on how to define and execute corporate strategy; a telematics firm needing high quality content on a consistent basis; a real estate firm looking for a marketing partner; or a supply chain firm in need of interim management, our clients rely on Fronetics to help them navigate through critical junctures, meet their toughest challenges, and take advantage of opportunities. We deliver high-impact results.

We advise and work with companies on their most critical issues and opportunities: strategy, marketing, organization, talent acquisition, performance management, and M&A support.

We have deep expertise and a proven track record in a broad range of industries, including supply chain, real estate, software, and logistics.

by Elizabeth Hines | Apr 6, 2015 | Big Data, Blog, Data/Analytics

Concurrent with the extraordinary rise of the Internet of Things (IoT), predictive analytics is gaining in popularity. With an increasing number of companies learning to master the precursors to developing predictive models (namely, connecting, monitoring, and analyzing), we can safely assume the art of gleaning business intelligence from foresight will continue to grow rapidly.

Indeed, Gartner analysts contend that “few technology areas will have greater potential to improve the financial performance and position of a commercial global enterprise than predictive analytics.” And executives seem eager to jump on the bandwagon: in a survey of executives conducted by Accenture, a full 88% indicated big data analytics is a top priority for their company. Amid the promises of predictive analytics, however, we also find a number of pitfalls. Some experts caution there are situations when predictive analytics techniques can prove inadequate, if not useless.

Here, we dissect three problems most commonly encountered by companies employing predictive analytics.

Past as a poor predictor of the future

The concept of predictive analytics is predicated on the assumption that future behavior can be more or less determined by examining past behavior; that is, predictive analytics works well in a stable environment in which the future of the business is likely to resemble its past and present. But Harvard Business School professor Clayton Christensen points out that in the event of a major disruption, the past does a poor job of foreshadowing future events. As an example, he cites the advent of PCs and commodity servers, arguing that computer vendors who specialized in minicomputers in the 1980s couldn’t possibly have predicted the sales impact of these products: they were innovations, so there was no historical data to analyze.

Interpreting bias

Consider also the bias in favor of a positive result when interpreting data for predictive purposes; it is one of the most common errors in predictive analytics projects. Speaking at the 2014 Predictive Analytics World conference in Boston, John Elder, president of consulting firm Elder Research, Inc., made a good point when he noted that people “often look for data to justify our decisions,” when it should be the other way around.

Collecting and analyzing unhelpful or superfluous data

Failure to tie data efforts to operational processes can lead to an unnecessary drain on staff resources. Mining big data will do little good if the insights are not directly tied to day-to-day operations. More companies than we probably realize are wasting precious time and manpower on big data projects that are not adequately understood, producing trivia rather than actionable business intelligence.

To overcome these common pitfalls, spend some time reviewing the sources of your data and the basic assumptions on which your predictive analytics projects are based. Because predictive analytics rests on the principle that past behavior can forecast future behavior, keep your ear to the ground for emerging industry trends or any other factors that might influence consumer behavior. Plan to revisit your data sources frequently to determine whether your historical sample is still representative of the conditions you are trying to predict and should continue to be used. Most importantly, regularly evaluate how your predictive analysis relates to and supports your company’s overall goals and objectives.
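One way to put the advice about revisiting your data sources into practice is to measure how far recent data has drifted from the historical data a model was built on. The sketch below uses the population stability index (PSI), a common drift measure; it is our own illustration, not a method drawn from the sources quoted above. The function name, the ten equal-width bins, and the sample data are hypothetical, and the 0.25 alert threshold is only a frequently quoted rule of thumb, not a standard.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    recent: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Hypothetical helper: compare one feature's distribution in the
    historical (baseline) sample with a recent sample. 0 means identical
    binned distributions; larger values mean more drift."""
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the baseline; recent values outside the
            # baseline range are clamped into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    p_base = proportions(baseline)
    p_recent = proportions(recent)
    # Each term is non-negative, so the index is never below zero.
    return sum((r - b) * math.log(r / b) for b, r in zip(p_base, p_recent))


# Illustrative data only: last year's values vs. a sample that shifted upward.
baseline = [float(x) for x in range(100)]
shifted = [float(x) + 50 for x in range(100)]
print(population_stability_index(baseline, baseline))  # 0.0
if population_stability_index(baseline, shifted) > 0.25:  # rule-of-thumb threshold
    print("Recent data has drifted; revisit the model's assumptions.")
```

A high score does not say the model is wrong, only that the data it now sees no longer looks like the data it learned from, which is exactly the situation in which the past stops being a reliable guide.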

What else do you think organizations can do to ward off the snags of predictive data analysis and use foresight more effectively?
