by Elizabeth Hines | Sep 1, 2015 | Big Data, Blog, Data/Analytics, Strategy, Supply Chain

Many organizations that jumped on the big data bandwagon have struggled to turn their new, boundless collection of data into actionable business information. As consultant Rich Sherman of Athena IT Solutions puts it, today’s businesses are struggling with “the transformation of data into information that is comprehensive, consistent, correct and current.”

Enter data governance programs, and, consequently, the data steward. Technology cannot always extract the most useful data, but data stewards can, which means the success of a data governance program rests to a large degree on their shoulders. But data stewards can be viewed with suspicion by other employees, and nothing kills the spirit of hard work faster than distrust or a feeling that management is snooping and ready to pounce at the slightest misstep.

Some business users are under the impression that data stewards have been tasked to play dual roles, championing the organization’s data governance program while also using their position to crack down on anyone stepping out of line. To some, the data steward is really a data cop who “polices” rather than manages the organization’s critical data elements. They believe anyone can be caught in the net cast by the data stewards as they fish for out-of-compliance data and bring it into line with policy or regulatory obligations. This can create needless friction within the organization and threaten the effectiveness of data efforts altogether. We can’t ignore concerns caused by the growing presence of data stewards at many organizations; in fact, that growing presence makes it even more important to show why such concerns are generally unfounded.

While it is true that data stewards are tasked with ensuring compliance with the policies and processes of the data governance program, critics need to bear the end goal in mind: to turn massive amounts of data into a usable corporate asset. And data stewards themselves can play a role in the effort “to take the view of data governance from police action to harmonious collaboration,” as another expert, Anne Marie Smith of Alabama Yankee Systems, LLC, put it. It won’t hurt for the data stewards – or the data governance managers – to acknowledge that some employees will initially question their intentions. However, by reaching out to each business unit and explaining how data governance works and why improved data management will benefit the organization, they can help the distrust dissipate. Similarly, recruiting the data steward from within the organization will alleviate some concerns, since business users are more likely to trust a familiar face.

The complexity of data governance comes with a host of pitfalls – fears of data cops shouldn’t be one of them. What’s been your experience with data stewards in the supply chain? Do they play an important role in your organization?

![Drowning in big data, parched for information [Infographic]](https://fronetics.com/wp-content/uploads/2024/10/big-data-2-1000x675.jpg)

by Fronetics | Aug 24, 2015 | Big Data, Blog, Data/Analytics, Strategy

Big data is big. More than 90% of the world’s data has been created over the past two years alone. Each day, more than 2.5 quintillion bytes of data are created. For those who are more numerically inclined, that is more than 2,500,000,000,000,000,000 bytes per day.

Companies are spending big money to determine how they can harness the power of big data and drive actionable, practical, and profitable results. The International Data Corporation (IDC) recently forecasted that the Big Data technology and services market will grow at a 26.4% compound annual growth rate to $41.5 billion through 2018, or about six times the growth rate of the overall information technology market.
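As a rough check on what a 26.4% compound annual growth rate implies, the arithmetic can be sketched in a few lines of Python. The five-year horizon and the function names below are assumptions for illustration only; the forecast as quoted here gives the rate and the 2018 figure but not the starting year.

```python
def growth_multiple(cagr, years):
    """Total growth factor after compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


def implied_start_value(end_value, cagr, years):
    """Work backwards from an end value to the value `years` earlier."""
    return end_value / growth_multiple(cagr, years)


def implied_baseline_rate(cagr, ratio):
    """If `cagr` is `ratio` times a baseline growth rate, return that baseline."""
    return cagr / ratio


# Assumption: a five-year forecast window ending in 2018.
multiple = growth_multiple(0.264, 5)
start_billion = implied_start_value(41.5, 0.264, 5)
it_market_rate = implied_baseline_rate(0.264, 6)

print(f"Growth multiple over 5 years: {multiple:.2f}x")
print(f"Implied starting market size: ${start_billion:.1f} billion")
print(f"Implied overall IT growth rate: {it_market_rate:.1%}")
```

Under that assumed horizon, the market would roughly triple, and "six times the growth rate of the overall information technology market" would put overall IT growth at a little over 4% a year.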

Weatherhead University Professor Gary King notes that: “There is a big data revolution, but it is not the quantity of data that is revolutionary. The big data revolution is that now we can do something with the data.” Herein lies the problem. Even as the quantity of big data being generated increases, and even as the money spent on big data increases, the majority of companies find themselves struggling to do something with the data. Companies are drowning in data while at the same time being parched for information.

KPMG recently conducted a survey of 144 CFOs and CIOs with the objective of gaining a more concrete understanding of the opportunities and challenges that big data and analytics present. The survey found that 99% of respondents believe that data and analytics are at least somewhat important to their business strategies; 69% consider them to be crucially or very important. Despite the perceived value of big data, 85% of respondents reported that they don’t know how to analyze and interpret the data they already have in hand (much less what to do with forthcoming data).

Moreover, 96% of survey respondents reported that the data being left on the table has untapped benefits. 56% of respondents believe the untapped benefits could be significant.

Research conducted by Andrew McAfee, co-director of the Initiative on the Digital Economy in the MIT Sloan School of Management, supports this belief. McAfee’s research found that “the more companies characterized themselves as data-driven, the better they performed on objective measures of financial and operational results.” Specifically, “companies in the top third of their industry in the use of data-driven decision making were, on average, 5% more productive and 6% more profitable than their competitors.”

Looking forward, companies that are able to effectively collect, analyze, and interpret data will gain a competitive advantage over those companies who are not able to do so.

by Elizabeth Hines | Jul 13, 2015 | Big Data, Blog, Data/Analytics, Strategy, Supply Chain

Analytics is good for business — as long as you can make sense of it.

Does your business suffer from a case of data overload? Or do you steer clear of new investments in supply chain analytics because you are afraid they could yield more data than your business can handle? You are in good company.

Several recent surveys indicate companies either are wary of advanced analytics tools or say they have failed to leverage the technology. The issue does not seem to be a lack of knowledge of its existence or potential impact — end users are generally well informed — but how to absorb the data effectively and apply it across the entire organization.

According to a Telematics Update report, for example, vendors would be wise to spend less time on their sales pitch and more time presenting the data in a digestible format, ensuring compatibility with the end user’s legacy systems, and aligning the solution with the end user’s key performance indicators.

The challenge is also captured in an Accenture survey in which only one in five companies said they are “very satisfied” with the returns they have received from analytics. And it’s not for lack of trying. Two-thirds of companies have appointed a chief data officer in the last 18 months to oversee data management and analytics, while 71 percent of those who have not created such a position plan to do so in the near future.

This passage from Accenture’s survey report hits the nail on the head:

Companies wanting to compete more aggressively with analytics will move rapidly to industrialize the discipline on an enterprise-wide scale, redesigning how fact-based insights get embedded into key processes, leading to smarter decisions and better business outcomes.

Most organizations measure too many things that don’t matter, and don’t put sufficient focus on those things that do, establishing a large set of metrics, but often lacking a causal mapping of the key drivers of their business.

As the survey suggests, the move away from an isolated approach to an integrated cross-functional model may be the key to squeezing the most out of supply chain analytics. According to Deloitte, the key to delivering strategic insights is creating a single authoritative data set from which all business units can draw information.

However, only 33% of the Accenture survey respondents said they are “aggressively using analytics across the entire enterprise.” Instead, highly customized data is often collected for units within the organization. A spending forecast by procurement may look nothing like its counterpart coming out of logistics. The inconsistency in reporting makes it hard to share the knowledge, and that takes us back to square one: lots of data and little useful information.

Jerry O’Dwyer, a principal with Deloitte Consulting, summed it up this way in a 2012 post:

If you are performing analytics in different areas of the supply chain — for example, spend analytics or demand planning — you may be missing opportunities that an expansive approach can yield. For companies of all kinds, in-depth supply chain analysis offers an opportunity to create increased value throughout their operations.

Let’s hear it: What do you think companies need to do to put analytics to effective use?

Fronetics Strategic Advisors is a leading management consulting firm. Our firm works with companies to identify and execute strategies for growth and value creation.

Whether it is a wholesale food distributor seeking guidance on how to define and execute corporate strategy; a telematics firm needing high quality content on a consistent basis; a real estate firm looking for a marketing partner; or a supply chain firm in need of interim management, our clients rely on Fronetics to help them navigate through critical junctures, meet their toughest challenges, and take advantage of opportunities. We deliver high-impact results.

We advise and work with companies on their most critical issues and opportunities: strategy, marketing, organization, talent acquisition, performance management, and M&A support.

We have deep expertise and a proven track record in a broad range of industries including: supply chain, real estate, software, and logistics.

by Fronetics | Jun 25, 2015 | Big Data, Blog, Data/Analytics, Internet of Things, Marketing

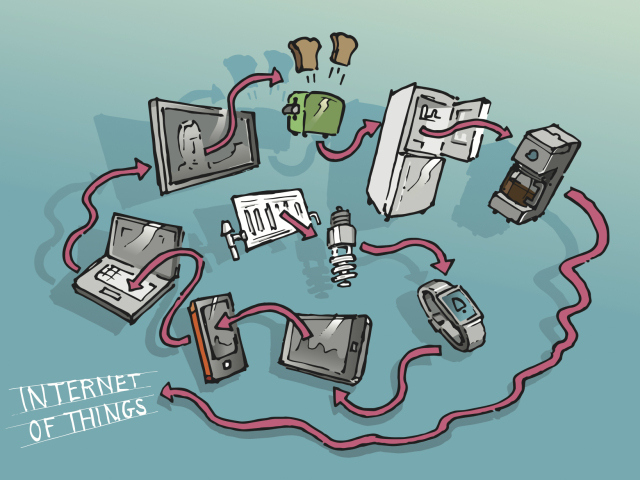

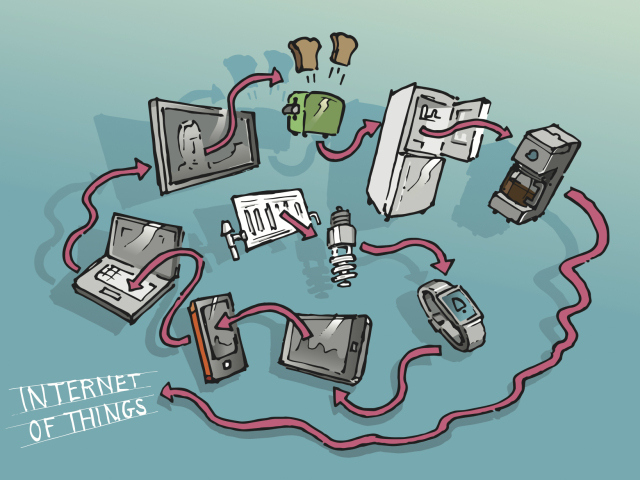

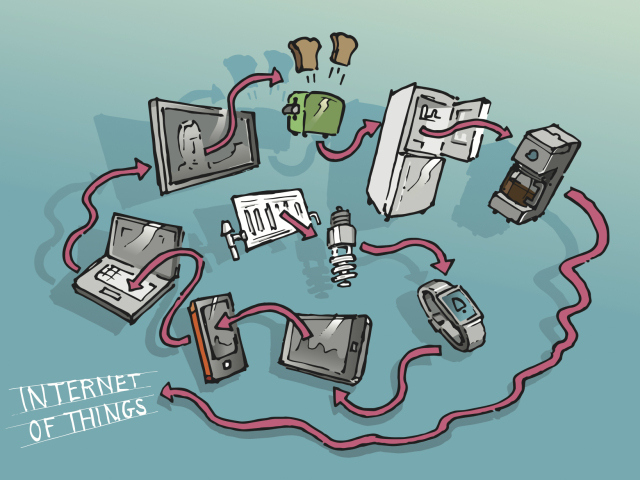

From coffee makers to urban design, the Internet of Things (IoT) is effecting change in virtually all aspects of daily life. And even though the IoT is still at the early-adopter stage, in just five years 50 billion devices are projected to be connected to the Internet, generating an estimated $2 trillion to $14 trillion in value. Expectations are running high; so high, in fact, that Gartner ranked IoT at the top of its 2014 Hype Cycle for Emerging Technologies.

Companies are naturally eager to get a piece of the action, with as many as three out of four exploring how IoT technologies could fit into their business operations. Too many times, however, companies end up with mountains of data and no actionable information. Entry into the IoT should come with a warning: analyze the data or prepare to be disappointed. Put another way, data without analytics is nothing but noise.

But unless businesses turn the focus away from pure data collection to data analysis, investments in IoT technologies are doomed to produce disappointing results. To be truly useful, the data should do more than “look pretty on a dashboard,” as Steven Sarracino, founder of Activant Capital Group, LLC, in Greenwich, Conn., pointed out.

Vendors of sensor technologies would simultaneously be wise to take their services beyond singing the virtues of amassing data to showing their clients how to make sensor-driven decisions. In fact, the need for more guidance was underscored in a fleet report by Tracking Automotive Technology: TU Automotive (previously Telematics Update). Vendors should, according to the report, present the data in a digestible format to assist overwhelmed end-users. While purely monitoring the performance of a forklift, for example, provides value, it is not until the data is analyzed and acted upon that maximum ROI is achieved. In the case of a forklift fleet, it might entail optimizing routes in the warehouse or performing preventive maintenance. As another example, the retail sector can apply analytics to data collected by security cameras and Wi-Fi beacons to help retailers understand what types of displays catch customers’ attention.

The adoption of IoT technologies will likely come easier to industries such as manufacturing and supply chain which already connect machinery and fleets with Internet-enabled sensors or devices. Smart grid technologies also hold a lot of promise for public utilities based on current industry trends, connecting countless data points for continuous monitoring and proactive management of the power supply. However, until companies are able to adequately apply analytics to squeeze value out of their investments, it may be a while before IoT technologies reach critical mass.

by Jennifer Hart Yim | May 12, 2015 | Big Data, Blog, Data/Analytics, Strategy

This article is part of a series of articles written by MBA students and graduates from the University of New Hampshire Peter T. Paul College of Business and Economics.

Josh Hutchins received his B.S. in Business Administration from the University of New Hampshire in 2005. He is currently pursuing his MBA at the Peter T. Paul School at the University of New Hampshire and is on course to graduate in May 2016.

“Working with data is something I do every day as a financial analyst. I enjoy crunching the data and experimenting with various data analytic techniques. I’ve found that my love for playing with the data and thinking in unconventional ways has led me to be efficient and successful at work. The way data is being used is revolutionizing the way we do business. I’m glad I can be part of this wave of the future.”

The responsibility of big data

Data is coming into businesses at incredible speeds, in large quantities, and in all types of different formats. In a world full of big data it’s not just having the data – it’s what you do with it that matters. Big data analysis is becoming a very powerful tool used by companies of all sizes. Companies are analyzing and using the data in order to create sustainable business models and gain a competitive advantage over their competition. However, as one company would come to learn – with big data comes big responsibility.

Solid Gold Bomb T-Shirt Company

In 2011, Solid Gold Bomb, an Amazon Marketplace merchant based in Australia, thought of an ingenious way to create fresh slogans for t-shirts. The main concept behind Solid Gold Bomb’s operation was that, by using a computer script, they could create clever t-shirt slogans that no one had thought of previously. The company created various t-shirt slogans that played off the popular British WWII-era phrase Keep Calm and Carry On. With the script doing the work, Solid Gold Bomb was able to create literally millions of t-shirt offerings without the need to hold them in inventory. With the substantial increase in product offerings, the chances of customers stumbling upon a Solid Gold Bomb shirt increased dramatically. By using this new on-demand approach to t-shirt printing, Solid Gold Bomb was able to reduce expenses while simultaneously increasing potential revenue by offering exponentially more products at little additional marginal cost.

Use of ‘Big Data’

The computer script relied on three things to operate: a large pool of words, association rule learning, and an algorithm.

Large Pool of Words – Solid Gold Bomb gathered a list of approximately 202,000 words that could be found in the dictionary. They whittled this list down to approximately 1,100 of the most popular words. Some of the words were too long to be included on a t-shirt, so the list was further culled. They settled on 700 different verbs and corresponding pronouns. These words would be used by the computer script to generate t-shirt slogans.

Association rule learning – Association rule learning is a method for discovering how strongly two variables in a given data set relate to each other. The first step is to identify and isolate the most frequent variables. The second step is to form rules based on different constraints on the variables, assigning each rule an “interestingness” factor. In the case of Solid Gold Bomb, association rule learning assigned an interestingness factor to verb-pronoun combinations.
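To make the idea of an “interestingness” factor more concrete, here is a minimal sketch in Python that computes three commonly used measures (support, confidence, and lift) for a word pair. The sample phrases and the function name are hypothetical, and the article does not say which measure Solid Gold Bomb actually used.

```python
# Hypothetical sample data: each set holds the words seen together in one phrase.
phrases = [
    {"carry", "on"},
    {"carry", "on"},
    {"follow", "me"},
    {"follow", "us"},
    {"carry", "me"},
]


def rule_metrics(transactions, antecedent, consequent):
    """Return (support, confidence, lift) for the rule antecedent -> consequent."""
    n = len(transactions)
    count_a = sum(1 for t in transactions if antecedent in t)
    count_c = sum(1 for t in transactions if consequent in t)
    count_both = sum(
        1 for t in transactions if antecedent in t and consequent in t
    )

    # Support: how often the pair appears together across all phrases.
    support = count_both / n
    # Confidence: how often the consequent appears when the antecedent does.
    confidence = count_both / count_a if count_a else 0.0
    # Lift: confidence relative to how common the consequent is overall.
    lift = confidence / (count_c / n) if count_c else 0.0
    return support, confidence, lift


support, confidence, lift = rule_metrics(phrases, "carry", "on")
print(f"support={support:.2f} confidence={confidence:.2f} lift={lift:.2f}")
```

A lift above 1 suggests the two words appear together more often than chance alone would predict, which is one way a script could rank verb-pronoun pairs.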

Algorithm – The algorithm designed by Solid Gold Bomb was very simple. Each shirt would begin with “Keep calm and”. The script would then search through the word pool and pull back the most highly associated verb and pronoun combinations. The words would then be put into the typical format of the Keep Calm and Carry On poster. An image of each individual combination would be mocked up and posted to their Amazon merchant account. The process would continuously loop, creating millions of combinations.
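The looping step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Solid Gold Bomb’s actual script: the word lists are hypothetical, the function name is made up, and the sketch simply enumerates every pairing rather than ranking pairs by association.

```python
from itertools import product

PREFIX = "Keep Calm and"


def generate_slogans(verbs, objects):
    """Yield one slogan for every (verb, object) pairing in the word pool."""
    for verb, obj in product(verbs, objects):
        yield f"{PREFIX} {verb.title()} {obj.title()}"


# Hypothetical word pool, kept deliberately tiny.
verbs = ["carry", "follow", "call"]
objects = ["on", "me", "us"]

slogans = list(generate_slogans(verbs, objects))
for slogan in slogans:
    print(slogan)

# The number of slogans is the product of the list sizes, so the catalog
# grows multiplicatively as words are added to either list.
print(len(slogans), "slogans from", len(verbs), "verbs and", len(objects), "objects")
```

The point of the sketch is the growth rate: every word added to one list creates a new slogan for every word in the other, and nobody reads each result before it is published.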

The Big Data Blues

With one innocent mistake, Solid Gold Bomb fell apart in the blink of an eye. Amazon started getting complaints about offensive slogans on Solid Gold Bomb’s t-shirts. T-shirts bearing phrases such as “Keep Calm and Rape Her” and “Keep Calm and Hit Her” were being offered for sale through the company’s Amazon merchant account. Typical weekend orders of around 800 shirts fell to just 3 – few enough to count on one hand. Amazon ended up pulling the company’s entire line of clothing, essentially putting Solid Gold Bomb out of business.

What went wrong?

While Solid Gold Bomb had a good handle on how to use the data they had gathered to their advantage, they neglected to consider the potential hidden consequences of unintentional misuse of that data. When culling 202,000 words down to 700 usable words, words such as “rape” should have been eliminated from the word pool. No person could name every potential combination of the 700 words without the assistance of a computer program. However, the end user needs to be aware that the program’s logic will create every potential combination the word pool allows.
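One way to picture the missing safeguard is a culling step that screens the word pool before any slogans are generated. The sketch below is a hypothetical illustration in Python: the blocklist, the length limit, and the function names are all assumptions, not a description of anything Solid Gold Bomb built.

```python
def cull_word_pool(words, blocklist, max_length=8):
    """Keep words that fit on a shirt and do not appear on the blocklist."""
    blocked = {word.lower() for word in blocklist}
    return [
        word
        for word in words
        if len(word) <= max_length and word.lower() not in blocked
    ]


def count_combinations(verbs, objects):
    """Number of slogans a generator would produce from these two lists."""
    return len(verbs) * len(objects)


# Hypothetical inputs: "hit" stands in for any word that should never be printed.
candidate_verbs = ["carry", "follow", "hit", "congratulate"]
objects = ["on", "me", "her"]
blocklist = ["hit"]

safe_verbs = cull_word_pool(candidate_verbs, blocklist)
print("Verbs kept:", safe_verbs)
print("Slogans before culling:", count_combinations(candidate_verbs, objects))
print("Slogans after culling:", count_combinations(safe_verbs, objects))
```

A blocklist is only a first line of defense. Words that are harmless on their own can still combine badly, so a review of the generated output, even a sampled one, would likely still be needed.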

Moral of the Story

Big data by itself is not beneficial to a company. The real value is in the analytics that are applied to the data. The results of the analytics can be used in numerous ways: to make more informed decisions, create new revenue streams, and create competitive advantages, to name a few. When a company decides to use big data analytics, each process needs to be mapped out so that the company has an intimate understanding of how the data will be used. Solid Gold Bomb failed to develop this understanding of how its data would be used throughout the process. As a result, they paid the ultimate price; they were not able to sustain themselves through this debacle. The moral of the story: With big data comes big responsibility. Know your data, and know its potential uses even better. Don’t be afraid to think outside the box, but know the potential consequences.

For information about another big data faux pas, learn how Target predicted that a 16-year-old girl was pregnant before her father knew.
